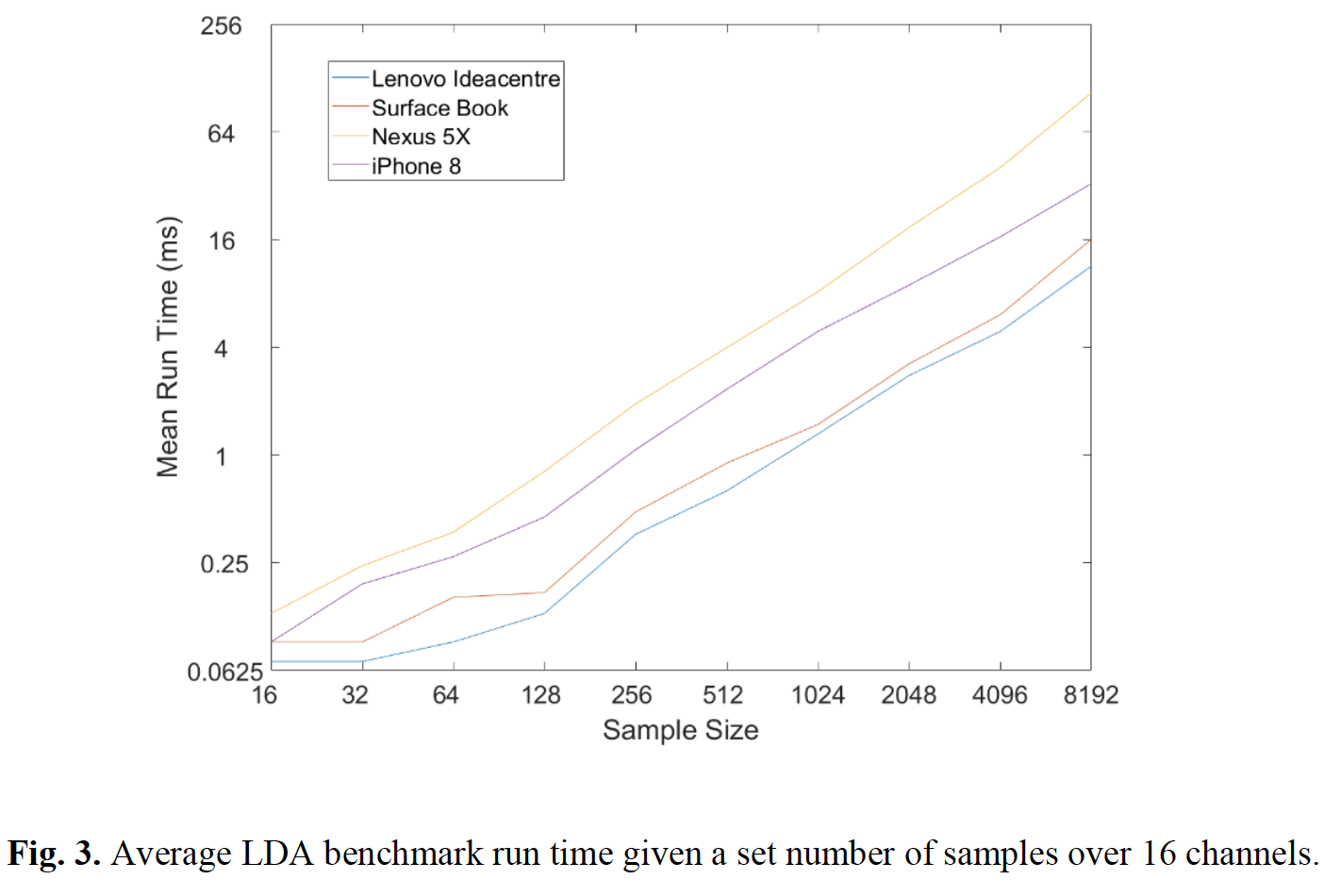

Stegman, P., Crawford, C.S., and Gray, J. (2018). WebBCI: An Electroencephalography Toolkit Built on Modern Web Technologies. HCI International 2018, July 15-20, 2018, Las Vegas, NV, USA. Status: Accepted

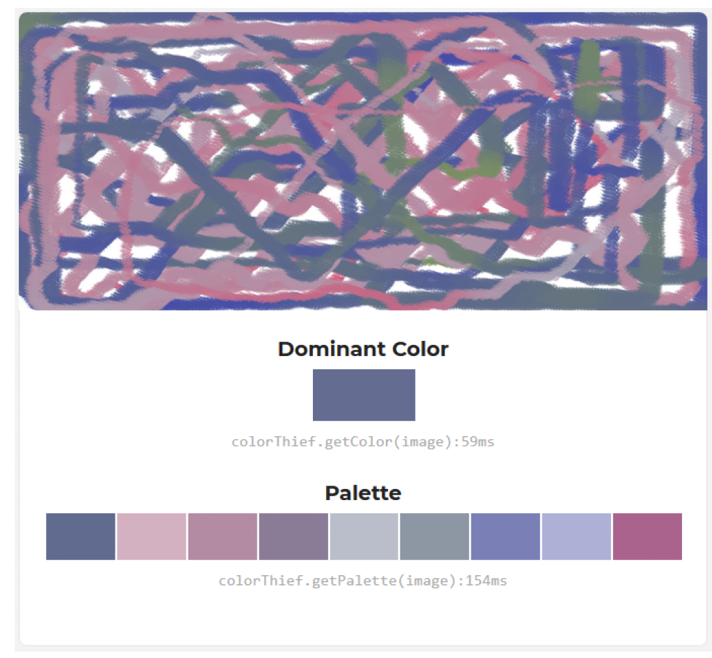

Cioli, N., Holloman, A., and Crawford, C. (2018). NeuroBrush: An Artistic Multi-Modal, Interactive Painting Competition. CHI '18 Artistic BCI Workshop, April 22, 2018, Montreal, QC, Canada. Status: Accepted

Crawford, C.S., Andujar, M., and Gilbert, J.E. (2018). Brain Computer Interface for Novice Programmers. ACM SIGCSE Technical Symposium on Computer Science Education, February 21-24, 2018, Baltimore, MD, USA, pp. 32-37.

Crawford, C.S., Andujar, M., and Gilbert, J.E. (2017). Neurophysiological Heat Maps for Human-Robot Interaction Evaluation. In Proceedings of 2017 AAAI Fall Symposium Series: Artificial Intelligence for Human-Robot Interaction, AAAI Technical Report FS-17-01, November 9-11, 2017, Arlington, VA, USA, pp. 90-93.

Lieblein, R., Hunter, C., Garcia, S., Andujar, M., Crawford, C. S., & Gilbert, J. E. (2017). NeuroSnap: Expressing the User’s Affective State with Facial Filters. In International Conference on Augmented Cognition (pp. 345-353). Springer, Cham.

Crawford, C.S., Andujar, M., Jackson, F., Applyrs, I., & Gilbert, J.E. (2016). Using a Visual Programing Language to Interact with Visualizations of Electroencephalography Signals. In Proceedings of the 2016 American Society for Engineering Education Southeastern Section (ASEE SE), Tuscaloosa, AL, March 13-15, 2016.

Andujar, M., Crawford, C. S., Nijholt, A., Jackson, F., & Gilbert, J. E. (2015). Artistic brain-computer interfaces: the expression and stimulation of the user’s affective state. Brain-Computer Interfaces, 2(2-3), pp. 60–69.

Crawford, C.S., Badea, C., Bailey, S.W., & Gilbert, J.E. (2015). Using Cr-Y Components to Detect Tongue Protrusion Gestures. In Proceedings of the 33rd Annual ACM CHI 2015 Conference Extended Abstracts, pp. 1331-1336, Seoul, Republic of Korea, April 18-23, 2015.

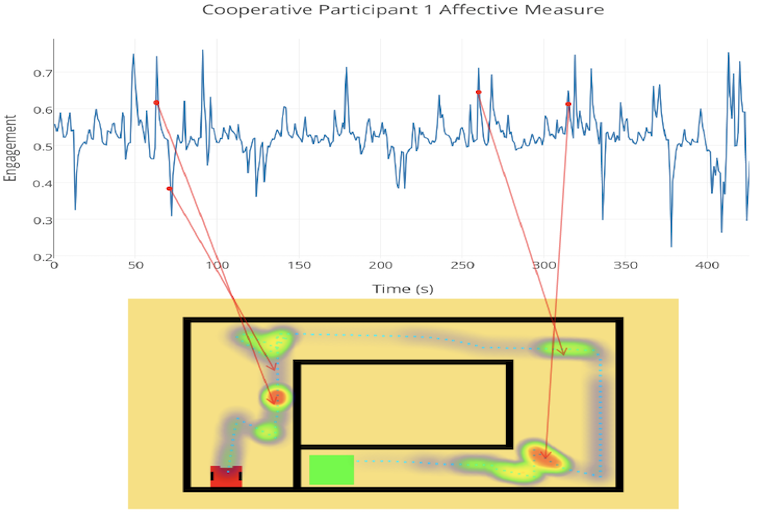

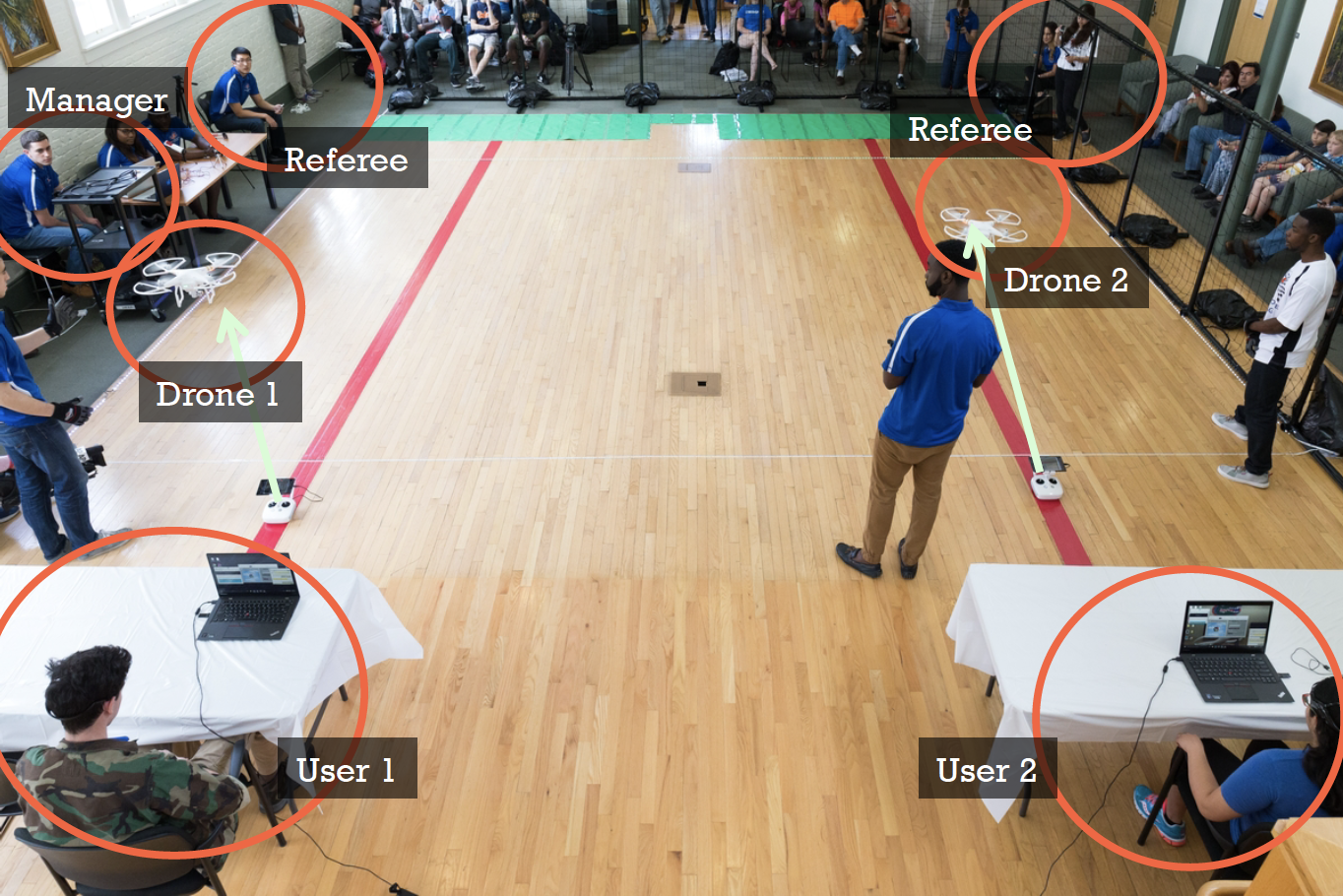

Crawford, C.S. & Gilbert, J.E. (2015). Towards Analyzing Cooperative Brain-Robot Interfaces Through Affective and Subjective Data. In Proceedings of the 10th Annual ACM/IEEE International Conference on Human-Robot Interaction Extended Abstracts, pp. 231-232.

Crawford, C.S., Andujar, M., Jackson, F., Remy, S., & Gilbert, J.E. (2015). User Experience Evaluation Towards Cooperative Brain-Robot Interaction. In Proceedings of the 17th International Conference on Human-Computer Interaction: Design and Evaluation, HCI International 2015, pp. 184–193, Los Angeles, CA, August 2-7, 2015, M. Kurosu (Ed.): Human-Computer Interaction, Part I, Springer LNCS 9169, DOI: 10.1007/978-3-319-20901-2_17.

Crawford, C.S., Andujar, M., Remy, S., & Gilbert, J.E. (2014). Cloud Infrastructure for Mind-Machine Interface. In Proceedings of the International Conference on Artificial Intelligence (ICAI), pp. 127-133.